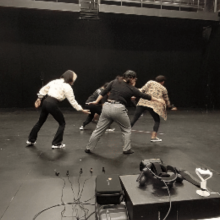

This research laboratory invited participants from the Drama, Dance, Music and DXARTS departments to test and explore Extended Reality systems for the formulation and implementation of performative scores and interactions for human-machine improvisation. It made use of a series of accessible and interchangeable algorithms that transform off-the-shelf consumer devices into tools for creative research beyond their intended uses or industries (e.g. gaming, surveillance and robotics).

By providing shared access to a range of technologies and extending their interdisciplinary reach, the laboratory sought to redirect time and focus toward testing and deploying unique performative prototypes that explore the possibilities of extended, technologically mediated performative languages. The active collaboration throughout this experiential research laboratory yielded valuable discoveries, applications and further conceptual enquiries. These expand the horizons for future interdisciplinary research initiatives in which divergent storytelling and logic structures can inform the creation of complex projects at the intersection of interactive installations, expanded cinema and live performance.

Overview of the laboratory

Software:

• Unity Game Engine: Handling spatial computation and real-time motion capture.

• TouchDesigner: Node-based visual programming environment with Python scripting, for multimedia and computer vision.

• SuperCollider: Audio synthesis and algorithmic composition.

• MaxMSP: Visual programming language for music and multimedia.

Virtual-reality interactions:

• Mapping the performative space with VR sensors.

• Position and motion tracking sensors.

• Manipulation of virtual elements such as sound, objects and physical simulations.

• Real-time interactions with sound and visuals.

• Immersive sound spatialization.

Team:

• XR programming, wearables set-up and ML: Laura Luna Castillo

• Ambisonics, DSP and ML: Daniel Peterson

• Ambisonic sound sources/Archive: Ewa Trębacz

• Documentation and Stage Manager: Maria Thrän

Performers:

• Carolina Marin

• Derek Crescenti

• Emily Schoen Branch

• Ashley Menestrina

• Ewa Trębacz

Participants:

• Students from DANCE 217 (30 students) with Derek Crescenti

• Students from DX482 (7 students) - with Professor Tivon Rice, Cristina Brambila & Eunsun Choi

• Students from DX490 (5 students) - with Professor Richard Karpen and Wei Yang

• Grad students from DRAMA (13 students) - with Professor Adrienne Mackie